Hybrid Quantum Long Short-Term Memory NLP Example

The following example is adapted from the official PyTorch documentation. Its purpose is to compare the results of PyTorch’s classical LSTM with those of our hybrid quantum implementation.

In brief, the model takes an input sequence \(w_1, \dots, w_M\) with \(w_i \in \mathcal{V}\) and its tags \(y_1, \dots, y_M\) with \(y_i \in \mathcal{T}\), where \(\mathcal{V}\) is our vocabulary and \(\mathcal{T}\) our tag set. It outputs a prediction \(\hat{y}_1, \dots, \hat{y}_M \in \mathcal{T}\) by applying the prediction rule below. Here \(h_i\) is the LSTM hidden state at step \(i\), and \(A\) and \(b\) are the weights and bias of the final linear layer.

\begin{equation} \hat{y}_i = \arg\max_{j} \left( \log \mathrm{Softmax}(A h_i + b) \right)_j \end{equation}

Please have a look at PyTorch’s official documentation for further details.
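The prediction rule is what the tagger computes below: `F.log_softmax` in the forward pass, followed by `argmax` in the trainer. Here is a small self-contained sketch that uses random tensors as stand-ins for the hidden states \(h_i\). The sizes are illustrative, not taken from a trained model. Log-softmax only subtracts a per-row constant, so the predicted tag is the same as the argmax of the raw logits \(A h_i + b\).

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

seq_len, hidden_dim, tagset_size = 5, 6, 3
# A and b in the rule play the role of the weight and bias of this linear layer
hidden2tag = torch.nn.Linear(hidden_dim, tagset_size)
# Random stand-ins for the LSTM hidden states h_1, ..., h_M (here M = seq_len)
h = torch.randn(seq_len, hidden_dim)

with torch.no_grad():
    logits = hidden2tag(h)                    # A h_i + b for every i
    log_probs = F.log_softmax(logits, dim=1)  # log Softmax(A h_i + b)
    y_hat = log_probs.argmax(dim=1)           # argmax over the tag index j

# log_softmax subtracts the same constant from each row, so the argmax is unchanged
assert torch.equal(y_hat, logits.argmax(dim=1))
print(y_hat)  # one predicted tag index per word
```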

```python
!pip install qlearnkit['pennylane']
!pip install matplotlib
```

Output: all requirements already satisfied. The environment was Python 3.8 with qlearnkit 0.2.1.dev6 (a local development build), pennylane 0.20.0, torch 1.10.2, qiskit-terra 0.20.0, numpy 1.22.0 and matplotlib 3.4.3.

```python
import qlearnkit as ql
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from matplotlib import pyplot as plt
```

Let’s first prepare our data.

```python
tag_to_ix = {"DET": 0, "NN": 1, "V": 2}  # Assign each tag a unique index
ix_to_tag = {i: k for k, i in tag_to_ix.items()}


def prepare_sequence(seq, to_ix):
    idxs = [to_ix[w] for w in seq]
    return torch.tensor(idxs, dtype=torch.long)


training_data = [
    # Tags are: DET - determiner; NN - noun; V - verb
    # For example, the word "The" is a determiner
    ("The dog ate the apple".split(), ["DET", "NN", "V", "DET", "NN"]),
    ("Everybody read that book".split(), ["NN", "V", "DET", "NN"])
]

word_to_ix = {}
# For each words-list (sentence) and tags-list in each tuple of training_data
for sent, tags in training_data:
    for word in sent:
        if word not in word_to_ix:  # word has not been assigned an index yet
            word_to_ix[word] = len(word_to_ix)  # Assign each word a unique index

print(f"Vocabulary: {word_to_ix}")
print(f"Entities: {ix_to_tag}")
```

```
Vocabulary: {'The': 0, 'dog': 1, 'ate': 2, 'the': 3, 'apple': 4, 'Everybody': 5, 'read': 6, 'that': 7, 'book': 8}
Entities: {0: 'DET', 1: 'NN', 2: 'V'}
```

Now let’s create the tagger model that wraps our LSTM.

```python
class LSTMTagger(nn.Module):

    def __init__(self,
                 model,
                 embedding_dim,
                 hidden_dim,
                 vocab_size,
                 tagset_size):
        super(LSTMTagger, self).__init__()
        self.hidden_dim = hidden_dim
        self.word_embeddings = nn.Embedding(vocab_size, embedding_dim)
        # The recurrent layer: either the classical or the quantum LSTM
        self.model = model
        # The linear layer that maps from hidden state space to tag space
        self.hidden2tag = nn.Linear(hidden_dim, tagset_size)

    def forward(self, sentence):
        embeds = self.word_embeddings(sentence)
        # Reshape to (seq_len, batch=1, embedding_dim) before the recurrent layer
        lstm_out, _ = self.model(embeds.view(len(sentence), 1, -1))
        tag_logits = self.hidden2tag(lstm_out.view(len(sentence), -1))
        tag_scores = F.log_softmax(tag_logits, dim=1)
        return tag_scores
```
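The `view` calls in `forward` are easiest to follow by tracing tensor shapes. The sketch below uses a classical `nn.LSTM` as a stand-in for `model` and the first training sentence, already converted to indices. It assumes the quantum LSTM follows the same `(seq_len, batch, features)` convention, which is what the tagger’s reshaping implies; this is not checked here.

```python
import torch
import torch.nn as nn

embedding_dim, hidden_dim, vocab_size, tagset_size = 8, 6, 9, 3

word_embeddings = nn.Embedding(vocab_size, embedding_dim)
lstm = nn.LSTM(embedding_dim, hidden_dim)  # classical stand-in for `model`
hidden2tag = nn.Linear(hidden_dim, tagset_size)

sentence = torch.tensor([0, 1, 2, 3, 4])                   # "The dog ate the apple"
embeds = word_embeddings(sentence)                         # (5, 8)
lstm_in = embeds.view(len(sentence), 1, -1)                # (seq_len, batch=1, 8)
lstm_out, (h_n, c_n) = lstm(lstm_in)                       # (5, 1, 6)
tag_logits = hidden2tag(lstm_out.view(len(sentence), -1))  # (5, 3)

for name, t in [("embeds", embeds), ("lstm_in", lstm_in),
                ("lstm_out", lstm_out), ("tag_logits", tag_logits)]:
    print(name, tuple(t.shape))
```

Each word ends up with one row of three tag scores, matching the three entries of `tag_to_ix`.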

Let’s set some hyperparameters by hand.

```python
embedding_dim = 8
hidden_dim = 6
n_layers = 1
n_qubits = 4
n_epochs = 300
device = 'default.qubit'
```
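As a reference point for the comparison, the classical baseline’s size follows directly from these hyperparameters. `n_qubits` and `device` are only passed to the quantum model. The sketch below counts the trainable parameters of `nn.LSTM(8, 6)` using PyTorch’s formula of four gates, each with input weights, recurrent weights and two bias vectors. It also counts the embedding and output layers of the tagger, using the 9-word vocabulary and 3 tags printed above. The quantum LSTM’s parameter count is not derived here.

```python
import torch.nn as nn

embedding_dim, hidden_dim, n_layers = 8, 6, 1
vocab_size, tagset_size = 9, 3  # from the vocabulary and tag set printed above

lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=n_layers)
n_lstm = sum(p.numel() for p in lstm.parameters())
# 4 gates x (input weights + recurrent weights) + 4 gates x two bias vectors
expected = 4 * hidden_dim * (embedding_dim + hidden_dim) + 2 * 4 * hidden_dim
assert n_lstm == expected

n_embed = vocab_size * embedding_dim                # embedding table
n_linear = hidden_dim * tagset_size + tagset_size   # hidden2tag weight + bias
print(n_lstm, n_embed, n_linear)  # 384 72 21
```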

Let’s also create a helper trainer that accepts our hyperparameters and a model, so that we can simply call it with both implementations.

```python
def trainer(lstm_model, embedding_dim, hidden_dim,
            n_epochs, model_label):
    # the LSTM Tagger Model
    model = LSTMTagger(lstm_model,
                       embedding_dim,
                       hidden_dim,
                       vocab_size=len(word_to_ix),
                       tagset_size=len(tag_to_ix))

    # negative log-likelihood loss and Stochastic Gradient Descent
    # as optimizer
    loss_function = nn.NLLLoss()
    optimizer = optim.SGD(model.parameters(), lr=0.1)

    history = {
        'loss': [],
        'acc': []
    }
    for epoch in range(n_epochs):
        losses = []
        preds = []
        targets = []
        for sentence, tags in training_data:
            # Step 1. Remember that PyTorch accumulates gradients.
            # We need to clear them out before each instance
            model.zero_grad()

            # Step 2. Get our inputs ready for the network, that is, turn them into
            # Tensors of word indices.
            sentence_in = prepare_sequence(sentence, word_to_ix)
            labels = prepare_sequence(tags, tag_to_ix)

            # Step 3. Run our forward pass.
            tag_scores = model(sentence_in)

            # Step 4. Compute the loss, gradients, and update the parameters by
            # calling optimizer.step()
            loss = loss_function(tag_scores, labels)
            loss.backward()
            optimizer.step()

            losses.append(float(loss))
            # tag_scores are log-probabilities, so their argmax is the predicted tag
            preds.append(tag_scores.argmax(dim=-1))
            targets.append(labels)

        avg_loss = np.mean(losses)
        history['loss'].append(avg_loss)

        preds = torch.cat(preds)
        targets = torch.cat(targets)
        corrects = (preds == targets)
        accuracy = corrects.sum().float() / float(targets.size(0))
        history['acc'].append(float(accuracy))
        print(f"Epoch {epoch + 1} / {n_epochs}: Loss = {avg_loss:.3f} Acc = {accuracy:.2f}")

    with torch.no_grad():
        input_sentence = training_data[0][0]
        labels = training_data[0][1]
        inputs = prepare_sequence(input_sentence, word_to_ix)
        tag_scores = model(inputs)

        tag_ids = torch.argmax(tag_scores, dim=1).numpy()
        tag_labels = [ix_to_tag[k] for k in tag_ids]
        print(f"Sentence: {input_sentence}")
        print(f"Labels: {labels}")
        print(f"Predicted: {tag_labels}")

    fig, ax1 = plt.subplots()
    ax1.set_xlabel("Epoch")
    ax1.set_ylabel("Loss")
    ax1.plot(history['loss'], label=f"{model_label} Loss")

    ax2 = ax1.twinx()
    ax2.set_ylabel("Accuracy")
    ax2.plot(history['acc'], label=f"{model_label} LSTM Accuracy", color='tab:red')

    plt.title("Part-of-Speech Tagger Training")
    plt.ylim(0., 1.5)
    # collect the lines of both axes so the legend shows loss and accuracy
    lines = ax1.get_lines() + ax2.get_lines()
    ax2.legend(lines, [line.get_label() for line in lines], loc="upper right")
    plt.show()
```
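The accuracy printed by `trainer` is token-level. The predictions for both training sentences are concatenated and compared tag by tag. With 5 + 4 = 9 tokens, the value can only move in steps of 1/9, which is why the logs below show values such as 0.22, 0.78 and 0.89. Here is a minimal sketch of the same computation as a standalone function; the name `token_accuracy` is hypothetical, not part of qlearnkit or PyTorch.

```python
import torch


def token_accuracy(preds, targets):
    # Concatenate per-sentence predictions and gold tags, then compare token by token
    preds, targets = torch.cat(preds), torch.cat(targets)
    return (preds == targets).float().mean().item()


# Two sentences with 5 and 4 tokens: all of the first and 2 of the second are right
preds = [torch.tensor([0, 1, 2, 0, 1]), torch.tensor([1, 1, 0, 0])]
targets = [torch.tensor([0, 1, 2, 0, 1]), torch.tensor([1, 2, 0, 1])]
print(f"{token_accuracy(preds, targets):.2f}")  # 0.78, i.e. 7 / 9
```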

Let’s create our quantum LSTM from qlearnkit.

```python
import qlearnkit.nn as qnn

qlstm = qnn.QLongShortTermMemory(
    embedding_dim,
    hidden_dim,
    n_layers,
    n_qubits=n_qubits,
    device=device
)
```

Let’s have a look at its (quantum) architecture.

NOTICE: ignore the following code per se. It has nothing to do with the actual usage of qlearnkit’s QLSTM and is only here to supply the parameters that qml.draw requires.

```python
# random values to feed into the QNode
inputs = torch.rand(embedding_dim, n_qubits)
weights = torch.rand(n_layers, n_qubits, 3)

import pennylane as qml

dev = qml.device(device, wires=n_qubits)
circ = qml.QNode(qlstm._construct_vqc, dev)
print(qml.draw(circ, expansion_strategy='device', show_all_wires=True)(inputs, weights))
```

```
0: ──H──RY(M0)──RZ(M4)──╭C─────────────────────────────╭X──RX(0.642)──RY(0.351)──RZ(0.687)──┤ ⟨Z⟩
1: ──H──RY(M1)──RZ(M5)──╰X──╭C───RX(0.138)──RY(0.664)──│───RZ(0.154)────────────────────────┤ ⟨Z⟩
2: ──H──RY(M2)──RZ(M6)──────╰X──╭C──────────RX(0.493)──│───RY(0.593)──RZ(0.452)─────────────┤ ⟨Z⟩
3: ──H──RY(M3)──RZ(M7)──────────╰X─────────────────────╰C──RX(0.968)──RY(0.899)──RZ(0.434)──┤ ⟨Z⟩

M0 =
tensor([0.4410, 0.2851, 0.0922, 0.2085, 0.4189, 0.5568, 0.3468, 0.4773])
M1 =
tensor([0.5397, 0.3276, 0.0610, 0.7491, 0.2016, 0.1892, 0.4293, 0.3255])
M2 =
tensor([0.5904, 0.5577, 0.2555, 0.6175, 0.4668, 0.4356, 0.3120, 0.4006])
M3 =
tensor([0.6520, 0.0839, 0.3924, 0.0972, 0.4129, 0.7706, 0.1809, 0.6805])
M4 =
tensor([0.2192, 0.0857, 0.0085, 0.0447, 0.1958, 0.3697, 0.1299, 0.2614])
M5 =
tensor([0.3446, 0.1150, 0.0037, 0.7129, 0.0418, 0.0367, 0.2065, 0.1134])
M6 =
tensor([0.4221, 0.3711, 0.0681, 0.4672, 0.2487, 0.2133, 0.1037, 0.1775])
M7 =
tensor([0.5277, 0.0071, 0.1696, 0.0095, 0.1896, 0.7558, 0.0334, 0.5800])
```

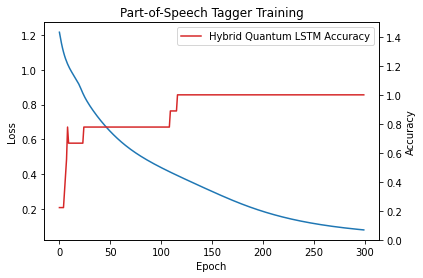
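To make the circuit drawing more concrete, here is a plain-NumPy statevector sketch of the single layer exactly as drawn. Each wire gets H, RY and RZ encoding, then a ring of CNOTs (0->1, 1->2, 2->3, 3->0), then trainable RX, RY and RZ, and finally a Pauli-Z expectation. The sketch is read off the diagram, not taken from qlearnkit’s source. The helpers `vqc`, `apply_1q` and `apply_cnot` are hypothetical. The encoding angles (the M0 ... M7 values) are passed in directly. How qlearnkit derives them from its inputs, or repeats layers when `n_layers > 1`, is not reproduced.

```python
import numpy as np

# Gate matrices; RX, RY, RZ rotate by angle t about the X, Y, Z axes
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)


def rx(t):
    c, s = np.cos(t / 2), np.sin(t / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])


def ry(t):
    c, s = np.cos(t / 2), np.sin(t / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


def rz(t):
    return np.diag([np.exp(-0.5j * t), np.exp(0.5j * t)])


def apply_1q(state, gate, wire):
    # The state has one axis of size 2 per wire; contract the gate with that axis
    state = np.tensordot(gate, state, axes=([1], [wire]))
    return np.moveaxis(state, 0, wire)


def apply_cnot(state, control, target):
    state = state.copy()
    idx = [slice(None)] * state.ndim
    idx[control] = 1
    # On the control=1 slice (control axis removed), flip the target qubit
    sub = state[tuple(idx)].copy()
    t_axis = target if target < control else target - 1
    state[tuple(idx)] = np.flip(sub, axis=t_axis)
    return state


def expval_z(state, wire):
    probs = np.abs(state) ** 2
    p = probs.sum(axis=tuple(a for a in range(state.ndim) if a != wire))
    return p[0] - p[1]


def vqc(ry_angles, rz_angles, weights):
    """One layer of the drawn circuit; returns <Z> on every wire."""
    n = len(ry_angles)
    state = np.zeros((2,) * n, dtype=complex)
    state[(0,) * n] = 1.0
    for w in range(n):  # encoding: H, RY, RZ on each wire
        state = apply_1q(state, H, w)
        state = apply_1q(state, ry(ry_angles[w]), w)
        state = apply_1q(state, rz(rz_angles[w]), w)
    for w in range(n):  # ring of CNOTs: 0->1, 1->2, ..., (n-1)->0
        state = apply_cnot(state, w, (w + 1) % n)
    for w in range(n):  # trainable rotations RX, RY, RZ
        a, b, c = weights[w]
        state = apply_1q(state, rx(a), w)
        state = apply_1q(state, ry(b), w)
        state = apply_1q(state, rz(c), w)
    return np.array([expval_z(state, w) for w in range(n)])


# Usage: wire 0 is prepared in |1> and the others in |0>, so the CNOT ring
# maps |1000> to |0111> and the Z expectations become [1, -1, -1, -1]
angles = [np.pi / 2, -np.pi / 2, -np.pi / 2, -np.pi / 2]
print(np.round(vqc(angles, np.zeros(4), np.zeros((4, 3))), 6))
```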

Now we can train our hybrid quantum model.

```python
trainer(qlstm, embedding_dim, hidden_dim, n_epochs, model_label='Hybrid Quantum')
```

Epoch 1 / 300: Loss = 1.216 Acc = 0.22

Epoch 2 / 300: Loss = 1.183 Acc = 0.22

Epoch 3 / 300: Loss = 1.153 Acc = 0.22

Epoch 4 / 300: Loss = 1.126 Acc = 0.22

Epoch 5 / 300: Loss = 1.103 Acc = 0.22

Epoch 6 / 300: Loss = 1.082 Acc = 0.33

Epoch 7 / 300: Loss = 1.064 Acc = 0.44

Epoch 8 / 300: Loss = 1.048 Acc = 0.56

Epoch 9 / 300: Loss = 1.034 Acc = 0.78

Epoch 10 / 300: Loss = 1.021 Acc = 0.67

Epoch 11 / 300: Loss = 1.009 Acc = 0.67

Epoch 12 / 300: Loss = 0.998 Acc = 0.67

Epoch 13 / 300: Loss = 0.988 Acc = 0.67

Epoch 14 / 300: Loss = 0.978 Acc = 0.67

Epoch 15 / 300: Loss = 0.969 Acc = 0.67

Epoch 16 / 300: Loss = 0.959 Acc = 0.67

Epoch 17 / 300: Loss = 0.950 Acc = 0.67

Epoch 18 / 300: Loss = 0.940 Acc = 0.67

Epoch 19 / 300: Loss = 0.930 Acc = 0.67

Epoch 20 / 300: Loss = 0.918 Acc = 0.67

Epoch 21 / 300: Loss = 0.906 Acc = 0.67

Epoch 22 / 300: Loss = 0.893 Acc = 0.67

Epoch 23 / 300: Loss = 0.879 Acc = 0.67

Epoch 24 / 300: Loss = 0.865 Acc = 0.67

Epoch 25 / 300: Loss = 0.853 Acc = 0.78

Epoch 26 / 300: Loss = 0.841 Acc = 0.78

Epoch 27 / 300: Loss = 0.830 Acc = 0.78

Epoch 28 / 300: Loss = 0.820 Acc = 0.78

Epoch 29 / 300: Loss = 0.811 Acc = 0.78

Epoch 30 / 300: Loss = 0.801 Acc = 0.78

Epoch 31 / 300: Loss = 0.792 Acc = 0.78

Epoch 32 / 300: Loss = 0.783 Acc = 0.78

Epoch 33 / 300: Loss = 0.775 Acc = 0.78

Epoch 34 / 300: Loss = 0.766 Acc = 0.78

Epoch 35 / 300: Loss = 0.758 Acc = 0.78

Epoch 36 / 300: Loss = 0.750 Acc = 0.78

Epoch 37 / 300: Loss = 0.742 Acc = 0.78

Epoch 38 / 300: Loss = 0.734 Acc = 0.78

Epoch 39 / 300: Loss = 0.726 Acc = 0.78

Epoch 40 / 300: Loss = 0.719 Acc = 0.78

Epoch 41 / 300: Loss = 0.712 Acc = 0.78

Epoch 42 / 300: Loss = 0.704 Acc = 0.78

Epoch 43 / 300: Loss = 0.697 Acc = 0.78

Epoch 44 / 300: Loss = 0.690 Acc = 0.78

Epoch 45 / 300: Loss = 0.683 Acc = 0.78

Epoch 46 / 300: Loss = 0.677 Acc = 0.78

Epoch 47 / 300: Loss = 0.670 Acc = 0.78

Epoch 48 / 300: Loss = 0.664 Acc = 0.78

Epoch 49 / 300: Loss = 0.657 Acc = 0.78

Epoch 50 / 300: Loss = 0.651 Acc = 0.78

Epoch 51 / 300: Loss = 0.645 Acc = 0.78

Epoch 52 / 300: Loss = 0.639 Acc = 0.78

Epoch 53 / 300: Loss = 0.633 Acc = 0.78

Epoch 54 / 300: Loss = 0.627 Acc = 0.78

Epoch 55 / 300: Loss = 0.621 Acc = 0.78

Epoch 56 / 300: Loss = 0.616 Acc = 0.78

Epoch 57 / 300: Loss = 0.610 Acc = 0.78

Epoch 58 / 300: Loss = 0.605 Acc = 0.78

Epoch 59 / 300: Loss = 0.599 Acc = 0.78

Epoch 60 / 300: Loss = 0.594 Acc = 0.78

Epoch 61 / 300: Loss = 0.589 Acc = 0.78

Epoch 62 / 300: Loss = 0.584 Acc = 0.78

Epoch 63 / 300: Loss = 0.579 Acc = 0.78

Epoch 64 / 300: Loss = 0.574 Acc = 0.78

Epoch 65 / 300: Loss = 0.569 Acc = 0.78

Epoch 66 / 300: Loss = 0.564 Acc = 0.78

Epoch 67 / 300: Loss = 0.560 Acc = 0.78

Epoch 68 / 300: Loss = 0.555 Acc = 0.78

Epoch 69 / 300: Loss = 0.551 Acc = 0.78

Epoch 70 / 300: Loss = 0.546 Acc = 0.78

Epoch 71 / 300: Loss = 0.542 Acc = 0.78

Epoch 72 / 300: Loss = 0.538 Acc = 0.78

Epoch 73 / 300: Loss = 0.533 Acc = 0.78

Epoch 74 / 300: Loss = 0.529 Acc = 0.78

Epoch 75 / 300: Loss = 0.525 Acc = 0.78

Epoch 76 / 300: Loss = 0.521 Acc = 0.78

Epoch 77 / 300: Loss = 0.517 Acc = 0.78

Epoch 78 / 300: Loss = 0.513 Acc = 0.78

Epoch 79 / 300: Loss = 0.510 Acc = 0.78

Epoch 80 / 300: Loss = 0.506 Acc = 0.78

Epoch 81 / 300: Loss = 0.502 Acc = 0.78

Epoch 82 / 300: Loss = 0.499 Acc = 0.78

Epoch 83 / 300: Loss = 0.495 Acc = 0.78

Epoch 84 / 300: Loss = 0.491 Acc = 0.78

Epoch 85 / 300: Loss = 0.488 Acc = 0.78

Epoch 86 / 300: Loss = 0.485 Acc = 0.78

Epoch 87 / 300: Loss = 0.481 Acc = 0.78

Epoch 88 / 300: Loss = 0.478 Acc = 0.78

Epoch 89 / 300: Loss = 0.474 Acc = 0.78

Epoch 90 / 300: Loss = 0.471 Acc = 0.78

Epoch 91 / 300: Loss = 0.468 Acc = 0.78

Epoch 92 / 300: Loss = 0.465 Acc = 0.78

Epoch 93 / 300: Loss = 0.461 Acc = 0.78

Epoch 94 / 300: Loss = 0.458 Acc = 0.78

Epoch 95 / 300: Loss = 0.455 Acc = 0.78

Epoch 96 / 300: Loss = 0.452 Acc = 0.78

Epoch 97 / 300: Loss = 0.449 Acc = 0.78

Epoch 98 / 300: Loss = 0.446 Acc = 0.78

Epoch 99 / 300: Loss = 0.443 Acc = 0.78

Epoch 100 / 300: Loss = 0.440 Acc = 0.78

Epoch 101 / 300: Loss = 0.437 Acc = 0.78

Epoch 102 / 300: Loss = 0.434 Acc = 0.78

Epoch 103 / 300: Loss = 0.431 Acc = 0.78

Epoch 104 / 300: Loss = 0.428 Acc = 0.78

Epoch 105 / 300: Loss = 0.425 Acc = 0.78

Epoch 106 / 300: Loss = 0.422 Acc = 0.78

Epoch 107 / 300: Loss = 0.419 Acc = 0.78

Epoch 108 / 300: Loss = 0.416 Acc = 0.78

Epoch 109 / 300: Loss = 0.413 Acc = 0.78

Epoch 110 / 300: Loss = 0.411 Acc = 0.89

Epoch 111 / 300: Loss = 0.408 Acc = 0.89

Epoch 112 / 300: Loss = 0.405 Acc = 0.89

Epoch 113 / 300: Loss = 0.402 Acc = 0.89

Epoch 114 / 300: Loss = 0.399 Acc = 0.89

Epoch 115 / 300: Loss = 0.396 Acc = 0.89

Epoch 116 / 300: Loss = 0.394 Acc = 0.89

Epoch 117 / 300: Loss = 0.391 Acc = 1.00

Epoch 118 / 300: Loss = 0.388 Acc = 1.00

Epoch 119 / 300: Loss = 0.385 Acc = 1.00

Epoch 120 / 300: Loss = 0.383 Acc = 1.00

Epoch 121 / 300: Loss = 0.380 Acc = 1.00

Epoch 122 / 300: Loss = 0.377 Acc = 1.00

Epoch 123 / 300: Loss = 0.374 Acc = 1.00

Epoch 124 / 300: Loss = 0.372 Acc = 1.00

Epoch 125 / 300: Loss = 0.369 Acc = 1.00

Epoch 126 / 300: Loss = 0.366 Acc = 1.00

Epoch 127 / 300: Loss = 0.364 Acc = 1.00

Epoch 128 / 300: Loss = 0.361 Acc = 1.00

Epoch 129 / 300: Loss = 0.358 Acc = 1.00

Epoch 130 / 300: Loss = 0.355 Acc = 1.00

Epoch 131 / 300: Loss = 0.353 Acc = 1.00

Epoch 132 / 300: Loss = 0.350 Acc = 1.00

Epoch 133 / 300: Loss = 0.347 Acc = 1.00

Epoch 134 / 300: Loss = 0.345 Acc = 1.00

Epoch 135 / 300: Loss = 0.342 Acc = 1.00

Epoch 136 / 300: Loss = 0.339 Acc = 1.00

Epoch 137 / 300: Loss = 0.337 Acc = 1.00

Epoch 138 / 300: Loss = 0.334 Acc = 1.00

Epoch 139 / 300: Loss = 0.331 Acc = 1.00

Epoch 140 / 300: Loss = 0.329 Acc = 1.00

Epoch 141 / 300: Loss = 0.326 Acc = 1.00

Epoch 142 / 300: Loss = 0.323 Acc = 1.00

Epoch 143 / 300: Loss = 0.321 Acc = 1.00

Epoch 144 / 300: Loss = 0.318 Acc = 1.00

Epoch 145 / 300: Loss = 0.315 Acc = 1.00

Epoch 146 / 300: Loss = 0.313 Acc = 1.00

Epoch 147 / 300: Loss = 0.310 Acc = 1.00

Epoch 148 / 300: Loss = 0.307 Acc = 1.00

Epoch 149 / 300: Loss = 0.305 Acc = 1.00

Epoch 150 / 300: Loss = 0.302 Acc = 1.00

Epoch 151 / 300: Loss = 0.299 Acc = 1.00

Epoch 152 / 300: Loss = 0.297 Acc = 1.00

Epoch 153 / 300: Loss = 0.294 Acc = 1.00

Epoch 154 / 300: Loss = 0.292 Acc = 1.00

Epoch 155 / 300: Loss = 0.289 Acc = 1.00

Epoch 156 / 300: Loss = 0.286 Acc = 1.00

Epoch 157 / 300: Loss = 0.284 Acc = 1.00

Epoch 158 / 300: Loss = 0.281 Acc = 1.00

Epoch 159 / 300: Loss = 0.279 Acc = 1.00

Epoch 160 / 300: Loss = 0.276 Acc = 1.00

Epoch 161 / 300: Loss = 0.273 Acc = 1.00

Epoch 162 / 300: Loss = 0.271 Acc = 1.00

Epoch 163 / 300: Loss = 0.268 Acc = 1.00

Epoch 164 / 300: Loss = 0.266 Acc = 1.00

Epoch 165 / 300: Loss = 0.263 Acc = 1.00

Epoch 166 / 300: Loss = 0.261 Acc = 1.00

Epoch 167 / 300: Loss = 0.258 Acc = 1.00

Epoch 168 / 300: Loss = 0.256 Acc = 1.00

Epoch 169 / 300: Loss = 0.253 Acc = 1.00

Epoch 170 / 300: Loss = 0.251 Acc = 1.00

Epoch 171 / 300: Loss = 0.249 Acc = 1.00

Epoch 172 / 300: Loss = 0.246 Acc = 1.00

Epoch 173 / 300: Loss = 0.244 Acc = 1.00

Epoch 174 / 300: Loss = 0.241 Acc = 1.00

Epoch 175 / 300: Loss = 0.239 Acc = 1.00

Epoch 176 / 300: Loss = 0.237 Acc = 1.00

Epoch 177 / 300: Loss = 0.234 Acc = 1.00

Epoch 178 / 300: Loss = 0.232 Acc = 1.00

Epoch 179 / 300: Loss = 0.230 Acc = 1.00

Epoch 180 / 300: Loss = 0.227 Acc = 1.00

Epoch 181 / 300: Loss = 0.225 Acc = 1.00

Epoch 182 / 300: Loss = 0.223 Acc = 1.00

Epoch 183 / 300: Loss = 0.221 Acc = 1.00

Epoch 184 / 300: Loss = 0.219 Acc = 1.00

Epoch 185 / 300: Loss = 0.216 Acc = 1.00

Epoch 186 / 300: Loss = 0.214 Acc = 1.00

Epoch 187 / 300: Loss = 0.212 Acc = 1.00

Epoch 188 / 300: Loss = 0.210 Acc = 1.00

Epoch 189 / 300: Loss = 0.208 Acc = 1.00

Epoch 190 / 300: Loss = 0.206 Acc = 1.00

Epoch 191 / 300: Loss = 0.204 Acc = 1.00

Epoch 192 / 300: Loss = 0.202 Acc = 1.00

Epoch 193 / 300: Loss = 0.200 Acc = 1.00

Epoch 194 / 300: Loss = 0.198 Acc = 1.00

Epoch 195 / 300: Loss = 0.196 Acc = 1.00

Epoch 196 / 300: Loss = 0.194 Acc = 1.00

Epoch 197 / 300: Loss = 0.192 Acc = 1.00

Epoch 198 / 300: Loss = 0.190 Acc = 1.00

Epoch 199 / 300: Loss = 0.188 Acc = 1.00

Epoch 200 / 300: Loss = 0.186 Acc = 1.00

Epoch 201 / 300: Loss = 0.184 Acc = 1.00

Epoch 202 / 300: Loss = 0.183 Acc = 1.00

Epoch 203 / 300: Loss = 0.181 Acc = 1.00

Epoch 204 / 300: Loss = 0.179 Acc = 1.00

Epoch 205 / 300: Loss = 0.177 Acc = 1.00

Epoch 206 / 300: Loss = 0.175 Acc = 1.00

Epoch 207 / 300: Loss = 0.174 Acc = 1.00

Epoch 208 / 300: Loss = 0.172 Acc = 1.00

Epoch 209 / 300: Loss = 0.170 Acc = 1.00

Epoch 210 / 300: Loss = 0.169 Acc = 1.00

Epoch 211 / 300: Loss = 0.167 Acc = 1.00

Epoch 212 / 300: Loss = 0.165 Acc = 1.00

Epoch 213 / 300: Loss = 0.164 Acc = 1.00

Epoch 214 / 300: Loss = 0.162 Acc = 1.00

Epoch 215 / 300: Loss = 0.160 Acc = 1.00

Epoch 216 / 300: Loss = 0.159 Acc = 1.00

Epoch 217 / 300: Loss = 0.157 Acc = 1.00

Epoch 218 / 300: Loss = 0.156 Acc = 1.00

Epoch 219 / 300: Loss = 0.154 Acc = 1.00

Epoch 220 / 300: Loss = 0.153 Acc = 1.00

Epoch 221 / 300: Loss = 0.151 Acc = 1.00

Epoch 222 / 300: Loss = 0.150 Acc = 1.00

Epoch 223 / 300: Loss = 0.149 Acc = 1.00

Epoch 224 / 300: Loss = 0.147 Acc = 1.00

Epoch 225 / 300: Loss = 0.146 Acc = 1.00

Epoch 226 / 300: Loss = 0.144 Acc = 1.00

Epoch 227 / 300: Loss = 0.143 Acc = 1.00

Epoch 228 / 300: Loss = 0.142 Acc = 1.00

Epoch 229 / 300: Loss = 0.140 Acc = 1.00

Epoch 230 / 300: Loss = 0.139 Acc = 1.00

Epoch 231 / 300: Loss = 0.138 Acc = 1.00

Epoch 232 / 300: Loss = 0.136 Acc = 1.00

Epoch 233 / 300: Loss = 0.135 Acc = 1.00

Epoch 234 / 300: Loss = 0.134 Acc = 1.00

Epoch 235 / 300: Loss = 0.133 Acc = 1.00

Epoch 236 / 300: Loss = 0.131 Acc = 1.00

Epoch 237 / 300: Loss = 0.130 Acc = 1.00

Epoch 238 / 300: Loss = 0.129 Acc = 1.00

Epoch 239 / 300: Loss = 0.128 Acc = 1.00

Epoch 240 / 300: Loss = 0.127 Acc = 1.00

Epoch 241 / 300: Loss = 0.125 Acc = 1.00

Epoch 242 / 300: Loss = 0.124 Acc = 1.00

Epoch 243 / 300: Loss = 0.123 Acc = 1.00

Epoch 244 / 300: Loss = 0.122 Acc = 1.00

Epoch 245 / 300: Loss = 0.121 Acc = 1.00

Epoch 246 / 300: Loss = 0.120 Acc = 1.00

Epoch 247 / 300: Loss = 0.119 Acc = 1.00

Epoch 248 / 300: Loss = 0.118 Acc = 1.00

Epoch 249 / 300: Loss = 0.117 Acc = 1.00

Epoch 250 / 300: Loss = 0.116 Acc = 1.00

Epoch 251 / 300: Loss = 0.115 Acc = 1.00

Epoch 252 / 300: Loss = 0.114 Acc = 1.00

Epoch 253 / 300: Loss = 0.113 Acc = 1.00

Epoch 254 / 300: Loss = 0.112 Acc = 1.00

Epoch 255 / 300: Loss = 0.111 Acc = 1.00

Epoch 256 / 300: Loss = 0.110 Acc = 1.00

Epoch 257 / 300: Loss = 0.109 Acc = 1.00

Epoch 258 / 300: Loss = 0.108 Acc = 1.00

Epoch 259 / 300: Loss = 0.107 Acc = 1.00

Epoch 260 / 300: Loss = 0.106 Acc = 1.00

Epoch 261 / 300: Loss = 0.105 Acc = 1.00

Epoch 262 / 300: Loss = 0.104 Acc = 1.00

Epoch 263 / 300: Loss = 0.104 Acc = 1.00

Epoch 264 / 300: Loss = 0.103 Acc = 1.00

Epoch 265 / 300: Loss = 0.102 Acc = 1.00

Epoch 266 / 300: Loss = 0.101 Acc = 1.00

Epoch 267 / 300: Loss = 0.100 Acc = 1.00

Epoch 268 / 300: Loss = 0.099 Acc = 1.00

Epoch 269 / 300: Loss = 0.099 Acc = 1.00

Epoch 270 / 300: Loss = 0.098 Acc = 1.00

Epoch 271 / 300: Loss = 0.097 Acc = 1.00

Epoch 272 / 300: Loss = 0.096 Acc = 1.00

Epoch 273 / 300: Loss = 0.096 Acc = 1.00

Epoch 274 / 300: Loss = 0.095 Acc = 1.00

Epoch 275 / 300: Loss = 0.094 Acc = 1.00

Epoch 276 / 300: Loss = 0.093 Acc = 1.00

Epoch 277 / 300: Loss = 0.093 Acc = 1.00

Epoch 278 / 300: Loss = 0.092 Acc = 1.00

Epoch 279 / 300: Loss = 0.091 Acc = 1.00

Epoch 280 / 300: Loss = 0.090 Acc = 1.00

Epoch 281 / 300: Loss = 0.090 Acc = 1.00

Epoch 282 / 300: Loss = 0.089 Acc = 1.00

Epoch 283 / 300: Loss = 0.088 Acc = 1.00

Epoch 284 / 300: Loss = 0.088 Acc = 1.00

Epoch 285 / 300: Loss = 0.087 Acc = 1.00

Epoch 286 / 300: Loss = 0.086 Acc = 1.00

Epoch 287 / 300: Loss = 0.086 Acc = 1.00

Epoch 288 / 300: Loss = 0.085 Acc = 1.00

Epoch 289 / 300: Loss = 0.084 Acc = 1.00

Epoch 290 / 300: Loss = 0.084 Acc = 1.00

Epoch 291 / 300: Loss = 0.083 Acc = 1.00

Epoch 292 / 300: Loss = 0.083 Acc = 1.00

Epoch 293 / 300: Loss = 0.082 Acc = 1.00

Epoch 294 / 300: Loss = 0.081 Acc = 1.00

Epoch 295 / 300: Loss = 0.081 Acc = 1.00

Epoch 296 / 300: Loss = 0.080 Acc = 1.00

Epoch 297 / 300: Loss = 0.080 Acc = 1.00

Epoch 298 / 300: Loss = 0.079 Acc = 1.00

Epoch 299 / 300: Loss = 0.078 Acc = 1.00

Epoch 300 / 300: Loss = 0.078 Acc = 1.00

Sentence: ['The', 'dog', 'ate', 'the', 'apple']

Labels: ['DET', 'NN', 'V', 'DET', 'NN']

Predicted: ['DET', 'NN', 'V', 'DET', 'NN']

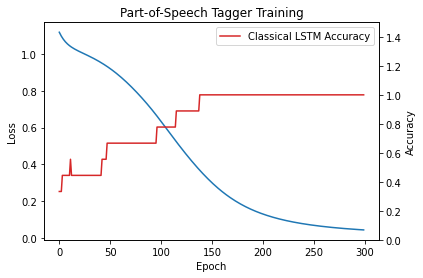
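No random seed is set anywhere in this notebook. The initial weights, and therefore the loss and accuracy curves of both runs, change every time the notebook is executed. For a repeatable comparison, one option is to seed PyTorch’s global RNG with `torch.manual_seed` right before building each model. The sketch below does this for the classical LSTM; `make_lstm` is a hypothetical helper. Calling `torch.manual_seed(...)` just before each `trainer(...)` call would likewise fix the embedding and linear layers created inside it. Whether this also fixes qlearnkit’s quantum weights depends on whether it initializes them through PyTorch’s RNG, which is an assumption not verified here.

```python
import torch
import torch.nn as nn


def make_lstm(seed, embedding_dim=8, hidden_dim=6, n_layers=1):
    # Seeding PyTorch's global RNG right before construction fixes the initial weights
    torch.manual_seed(seed)
    return nn.LSTM(embedding_dim, hidden_dim, num_layers=n_layers)


a, b, c = make_lstm(0), make_lstm(0), make_lstm(1)
same = all(torch.equal(p, q) for p, q in zip(a.parameters(), b.parameters()))
different = any(not torch.equal(p, q) for p, q in zip(a.parameters(), c.parameters()))
print(same, different)  # True True
```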

Let’s compare it with the classical version.

```python
clstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=n_layers)
trainer(clstm, embedding_dim, hidden_dim, n_epochs, model_label='Classical')
```

Epoch 1 / 300: Loss = 1.119 Acc = 0.33

Epoch 2 / 300: Loss = 1.108 Acc = 0.33

Epoch 3 / 300: Loss = 1.098 Acc = 0.33

Epoch 4 / 300: Loss = 1.089 Acc = 0.44

Epoch 5 / 300: Loss = 1.081 Acc = 0.44

Epoch 6 / 300: Loss = 1.073 Acc = 0.44

Epoch 7 / 300: Loss = 1.067 Acc = 0.44

Epoch 8 / 300: Loss = 1.061 Acc = 0.44

Epoch 9 / 300: Loss = 1.055 Acc = 0.44

Epoch 10 / 300: Loss = 1.051 Acc = 0.44

Epoch 11 / 300: Loss = 1.046 Acc = 0.44

Epoch 12 / 300: Loss = 1.042 Acc = 0.56

Epoch 13 / 300: Loss = 1.038 Acc = 0.44

Epoch 14 / 300: Loss = 1.034 Acc = 0.44

Epoch 15 / 300: Loss = 1.031 Acc = 0.44

Epoch 16 / 300: Loss = 1.028 Acc = 0.44

Epoch 17 / 300: Loss = 1.025 Acc = 0.44

Epoch 18 / 300: Loss = 1.022 Acc = 0.44

Epoch 19 / 300: Loss = 1.019 Acc = 0.44

Epoch 20 / 300: Loss = 1.016 Acc = 0.44

Epoch 21 / 300: Loss = 1.013 Acc = 0.44

Epoch 22 / 300: Loss = 1.011 Acc = 0.44

Epoch 23 / 300: Loss = 1.008 Acc = 0.44

Epoch 24 / 300: Loss = 1.005 Acc = 0.44

Epoch 25 / 300: Loss = 1.003 Acc = 0.44

Epoch 26 / 300: Loss = 1.000 Acc = 0.44

Epoch 27 / 300: Loss = 0.998 Acc = 0.44

Epoch 28 / 300: Loss = 0.995 Acc = 0.44

Epoch 29 / 300: Loss = 0.992 Acc = 0.44

Epoch 30 / 300: Loss = 0.990 Acc = 0.44

Epoch 31 / 300: Loss = 0.987 Acc = 0.44

Epoch 32 / 300: Loss = 0.984 Acc = 0.44

Epoch 33 / 300: Loss = 0.981 Acc = 0.44

Epoch 34 / 300: Loss = 0.978 Acc = 0.44

Epoch 35 / 300: Loss = 0.976 Acc = 0.44

Epoch 36 / 300: Loss = 0.973 Acc = 0.44

Epoch 37 / 300: Loss = 0.970 Acc = 0.44

Epoch 38 / 300: Loss = 0.967 Acc = 0.44

Epoch 39 / 300: Loss = 0.963 Acc = 0.44

Epoch 40 / 300: Loss = 0.960 Acc = 0.44

Epoch 41 / 300: Loss = 0.957 Acc = 0.44

Epoch 42 / 300: Loss = 0.954 Acc = 0.44

Epoch 43 / 300: Loss = 0.950 Acc = 0.56

Epoch 44 / 300: Loss = 0.947 Acc = 0.56

Epoch 45 / 300: Loss = 0.943 Acc = 0.56

Epoch 46 / 300: Loss = 0.940 Acc = 0.56

Epoch 47 / 300: Loss = 0.936 Acc = 0.56

Epoch 48 / 300: Loss = 0.932 Acc = 0.67

Epoch 49 / 300: Loss = 0.929 Acc = 0.67

Epoch 50 / 300: Loss = 0.925 Acc = 0.67

Epoch 51 / 300: Loss = 0.921 Acc = 0.67

Epoch 52 / 300: Loss = 0.917 Acc = 0.67

Epoch 53 / 300: Loss = 0.913 Acc = 0.67

Epoch 54 / 300: Loss = 0.909 Acc = 0.67

Epoch 55 / 300: Loss = 0.904 Acc = 0.67

Epoch 56 / 300: Loss = 0.900 Acc = 0.67

Epoch 57 / 300: Loss = 0.896 Acc = 0.67

Epoch 58 / 300: Loss = 0.891 Acc = 0.67

Epoch 59 / 300: Loss = 0.887 Acc = 0.67

Epoch 60 / 300: Loss = 0.882 Acc = 0.67

Epoch 61 / 300: Loss = 0.877 Acc = 0.67

Epoch 62 / 300: Loss = 0.872 Acc = 0.67

Epoch 63 / 300: Loss = 0.868 Acc = 0.67

Epoch 64 / 300: Loss = 0.863 Acc = 0.67

Epoch 65 / 300: Loss = 0.858 Acc = 0.67

Epoch 66 / 300: Loss = 0.853 Acc = 0.67

Epoch 67 / 300: Loss = 0.847 Acc = 0.67

Epoch 68 / 300: Loss = 0.842 Acc = 0.67

Epoch 69 / 300: Loss = 0.837 Acc = 0.67

Epoch 70 / 300: Loss = 0.831 Acc = 0.67

Epoch 71 / 300: Loss = 0.826 Acc = 0.67

Epoch 72 / 300: Loss = 0.821 Acc = 0.67

Epoch 73 / 300: Loss = 0.815 Acc = 0.67

Epoch 74 / 300: Loss = 0.809 Acc = 0.67

Epoch 75 / 300: Loss = 0.804 Acc = 0.67

Epoch 76 / 300: Loss = 0.798 Acc = 0.67

Epoch 77 / 300: Loss = 0.792 Acc = 0.67

Epoch 78 / 300: Loss = 0.786 Acc = 0.67

Epoch 79 / 300: Loss = 0.780 Acc = 0.67

Epoch 80 / 300: Loss = 0.774 Acc = 0.67

Epoch 81 / 300: Loss = 0.768 Acc = 0.67

Epoch 82 / 300: Loss = 0.762 Acc = 0.67

Epoch 83 / 300: Loss = 0.756 Acc = 0.67

Epoch 84 / 300: Loss = 0.750 Acc = 0.67

Epoch 85 / 300: Loss = 0.743 Acc = 0.67

Epoch 86 / 300: Loss = 0.737 Acc = 0.67

Epoch 87 / 300: Loss = 0.730 Acc = 0.67

Epoch 88 / 300: Loss = 0.724 Acc = 0.67

Epoch 89 / 300: Loss = 0.717 Acc = 0.67

Epoch 90 / 300: Loss = 0.711 Acc = 0.67

Epoch 91 / 300: Loss = 0.704 Acc = 0.67

Epoch 92 / 300: Loss = 0.697 Acc = 0.67

Epoch 93 / 300: Loss = 0.690 Acc = 0.67

Epoch 94 / 300: Loss = 0.683 Acc = 0.67

Epoch 95 / 300: Loss = 0.676 Acc = 0.67

Epoch 96 / 300: Loss = 0.669 Acc = 0.67

Epoch 97 / 300: Loss = 0.662 Acc = 0.78

Epoch 98 / 300: Loss = 0.655 Acc = 0.78

Epoch 99 / 300: Loss = 0.648 Acc = 0.78

Epoch 100 / 300: Loss = 0.640 Acc = 0.78

Epoch 101 / 300: Loss = 0.633 Acc = 0.78

Epoch 102 / 300: Loss = 0.626 Acc = 0.78

Epoch 103 / 300: Loss = 0.618 Acc = 0.78

Epoch 104 / 300: Loss = 0.611 Acc = 0.78

Epoch 105 / 300: Loss = 0.604 Acc = 0.78

Epoch 106 / 300: Loss = 0.596 Acc = 0.78

Epoch 107 / 300: Loss = 0.589 Acc = 0.78

Epoch 108 / 300: Loss = 0.581 Acc = 0.78

Epoch 109 / 300: Loss = 0.574 Acc = 0.78

Epoch 110 / 300: Loss = 0.567 Acc = 0.78

Epoch 111 / 300: Loss = 0.559 Acc = 0.78

Epoch 112 / 300: Loss = 0.552 Acc = 0.78

Epoch 113 / 300: Loss = 0.544 Acc = 0.78

Epoch 114 / 300: Loss = 0.537 Acc = 0.78

Epoch 115 / 300: Loss = 0.530 Acc = 0.78

Epoch 116 / 300: Loss = 0.523 Acc = 0.89

Epoch 117 / 300: Loss = 0.515 Acc = 0.89

Epoch 118 / 300: Loss = 0.508 Acc = 0.89

Epoch 119 / 300: Loss = 0.501 Acc = 0.89

Epoch 120 / 300: Loss = 0.494 Acc = 0.89

Epoch 121 / 300: Loss = 0.487 Acc = 0.89

Epoch 122 / 300: Loss = 0.480 Acc = 0.89

Epoch 123 / 300: Loss = 0.473 Acc = 0.89

Epoch 124 / 300: Loss = 0.466 Acc = 0.89

Epoch 125 / 300: Loss = 0.459 Acc = 0.89

Epoch 126 / 300: Loss = 0.452 Acc = 0.89

Epoch 127 / 300: Loss = 0.445 Acc = 0.89

Epoch 128 / 300: Loss = 0.438 Acc = 0.89

Epoch 129 / 300: Loss = 0.431 Acc = 0.89

Epoch 130 / 300: Loss = 0.425 Acc = 0.89

Epoch 131 / 300: Loss = 0.418 Acc = 0.89

Epoch 132 / 300: Loss = 0.412 Acc = 0.89

Epoch 133 / 300: Loss = 0.405 Acc = 0.89

Epoch 134 / 300: Loss = 0.399 Acc = 0.89

Epoch 135 / 300: Loss = 0.392 Acc = 0.89

Epoch 136 / 300: Loss = 0.386 Acc = 0.89

Epoch 137 / 300: Loss = 0.380 Acc = 0.89

Epoch 138 / 300: Loss = 0.373 Acc = 0.89

Epoch 139 / 300: Loss = 0.367 Acc = 1.00

Epoch 140 / 300: Loss = 0.361 Acc = 1.00

Epoch 141 / 300: Loss = 0.355 Acc = 1.00

Epoch 142 / 300: Loss = 0.349 Acc = 1.00

Epoch 143 / 300: Loss = 0.343 Acc = 1.00

Epoch 144 / 300: Loss = 0.338 Acc = 1.00

Epoch 145 / 300: Loss = 0.332 Acc = 1.00

Epoch 146 / 300: Loss = 0.326 Acc = 1.00

Epoch 147 / 300: Loss = 0.321 Acc = 1.00

Epoch 148 / 300: Loss = 0.315 Acc = 1.00

Epoch 149 / 300: Loss = 0.310 Acc = 1.00

Epoch 150 / 300: Loss = 0.305 Acc = 1.00

Epoch 151 / 300: Loss = 0.299 Acc = 1.00

Epoch 152 / 300: Loss = 0.294 Acc = 1.00

Epoch 153 / 300: Loss = 0.289 Acc = 1.00

Epoch 154 / 300: Loss = 0.284 Acc = 1.00

Epoch 155 / 300: Loss = 0.279 Acc = 1.00

Epoch 156 / 300: Loss = 0.274 Acc = 1.00

Epoch 157 / 300: Loss = 0.269 Acc = 1.00

Epoch 158 / 300: Loss = 0.265 Acc = 1.00

Epoch 159 / 300: Loss = 0.260 Acc = 1.00

Epoch 160 / 300: Loss = 0.256 Acc = 1.00

Epoch 161 / 300: Loss = 0.251 Acc = 1.00

Epoch 162 / 300: Loss = 0.247 Acc = 1.00

Epoch 163 / 300: Loss = 0.242 Acc = 1.00

Epoch 164 / 300: Loss = 0.238 Acc = 1.00

Epoch 165 / 300: Loss = 0.234 Acc = 1.00

Epoch 166 / 300: Loss = 0.230 Acc = 1.00

Epoch 167 / 300: Loss = 0.226 Acc = 1.00

Epoch 168 / 300: Loss = 0.222 Acc = 1.00

Epoch 169 / 300: Loss = 0.218 Acc = 1.00

Epoch 170 / 300: Loss = 0.214 Acc = 1.00

Epoch 171 / 300: Loss = 0.211 Acc = 1.00

Epoch 172 / 300: Loss = 0.207 Acc = 1.00

Epoch 173 / 300: Loss = 0.204 Acc = 1.00

Epoch 174 / 300: Loss = 0.200 Acc = 1.00

Epoch 175 / 300: Loss = 0.197 Acc = 1.00

Epoch 176 / 300: Loss = 0.193 Acc = 1.00

Epoch 177 / 300: Loss = 0.190 Acc = 1.00

Epoch 178 / 300: Loss = 0.187 Acc = 1.00

Epoch 179 / 300: Loss = 0.184 Acc = 1.00

Epoch 180 / 300: Loss = 0.181 Acc = 1.00

Epoch 181 / 300: Loss = 0.178 Acc = 1.00

Epoch 182 / 300: Loss = 0.175 Acc = 1.00

Epoch 183 / 300: Loss = 0.172 Acc = 1.00

Epoch 184 / 300: Loss = 0.169 Acc = 1.00

Epoch 185 / 300: Loss = 0.166 Acc = 1.00

Epoch 186 / 300: Loss = 0.164 Acc = 1.00

Epoch 187 / 300: Loss = 0.161 Acc = 1.00

Epoch 188 / 300: Loss = 0.158 Acc = 1.00

Epoch 189 / 300: Loss = 0.156 Acc = 1.00

Epoch 190 / 300: Loss = 0.153 Acc = 1.00

Epoch 191 / 300: Loss = 0.151 Acc = 1.00

Epoch 192 / 300: Loss = 0.148 Acc = 1.00

Epoch 193 / 300: Loss = 0.146 Acc = 1.00

Epoch 194 / 300: Loss = 0.144 Acc = 1.00

Epoch 195 / 300: Loss = 0.142 Acc = 1.00

Epoch 196 / 300: Loss = 0.139 Acc = 1.00

Epoch 197 / 300: Loss = 0.137 Acc = 1.00

Epoch 198 / 300: Loss = 0.135 Acc = 1.00

Epoch 199 / 300: Loss = 0.133 Acc = 1.00

Epoch 200 / 300: Loss = 0.131 Acc = 1.00

Epoch 201 / 300: Loss = 0.129 Acc = 1.00

Epoch 202 / 300: Loss = 0.127 Acc = 1.00

Epoch 203 / 300: Loss = 0.125 Acc = 1.00

Epoch 204 / 300: Loss = 0.123 Acc = 1.00

Epoch 205 / 300: Loss = 0.122 Acc = 1.00

Epoch 206 / 300: Loss = 0.120 Acc = 1.00

Epoch 207 / 300: Loss = 0.118 Acc = 1.00

Epoch 208 / 300: Loss = 0.116 Acc = 1.00

Epoch 209 / 300: Loss = 0.115 Acc = 1.00

Epoch 210 / 300: Loss = 0.113 Acc = 1.00

Epoch 211 / 300: Loss = 0.111 Acc = 1.00

Epoch 212 / 300: Loss = 0.110 Acc = 1.00

Epoch 213 / 300: Loss = 0.108 Acc = 1.00

Epoch 214 / 300: Loss = 0.107 Acc = 1.00

Epoch 215 / 300: Loss = 0.105 Acc = 1.00

Epoch 216 / 300: Loss = 0.104 Acc = 1.00

Epoch 217 / 300: Loss = 0.102 Acc = 1.00

Epoch 218 / 300: Loss = 0.101 Acc = 1.00

Epoch 219 / 300: Loss = 0.100 Acc = 1.00

Epoch 220 / 300: Loss = 0.098 Acc = 1.00

Epoch 221 / 300: Loss = 0.097 Acc = 1.00

Epoch 222 / 300: Loss = 0.096 Acc = 1.00

Epoch 223 / 300: Loss = 0.094 Acc = 1.00

Epoch 224 / 300: Loss = 0.093 Acc = 1.00

Epoch 225 / 300: Loss = 0.092 Acc = 1.00

Epoch 226 / 300: Loss = 0.091 Acc = 1.00

Epoch 227 / 300: Loss = 0.090 Acc = 1.00

Epoch 228 / 300: Loss = 0.089 Acc = 1.00

Epoch 229 / 300: Loss = 0.087 Acc = 1.00

Epoch 230 / 300: Loss = 0.086 Acc = 1.00

Epoch 231 / 300: Loss = 0.085 Acc = 1.00

Epoch 232 / 300: Loss = 0.084 Acc = 1.00

Epoch 233 / 300: Loss = 0.083 Acc = 1.00

Epoch 234 / 300: Loss = 0.082 Acc = 1.00

Epoch 235 / 300: Loss = 0.081 Acc = 1.00

Epoch 236 / 300: Loss = 0.080 Acc = 1.00

Epoch 237 / 300: Loss = 0.079 Acc = 1.00

Epoch 238 / 300: Loss = 0.078 Acc = 1.00

Epoch 239 / 300: Loss = 0.077 Acc = 1.00

Epoch 240 / 300: Loss = 0.076 Acc = 1.00

Epoch 241 / 300: Loss = 0.076 Acc = 1.00

Epoch 242 / 300: Loss = 0.075 Acc = 1.00

Epoch 243 / 300: Loss = 0.074 Acc = 1.00

Epoch 244 / 300: Loss = 0.073 Acc = 1.00

Epoch 245 / 300: Loss = 0.072 Acc = 1.00

Epoch 246 / 300: Loss = 0.071 Acc = 1.00

Epoch 247 / 300: Loss = 0.071 Acc = 1.00

Epoch 248 / 300: Loss = 0.070 Acc = 1.00

Epoch 249 / 300: Loss = 0.069 Acc = 1.00

Epoch 250 / 300: Loss = 0.068 Acc = 1.00

Epoch 251 / 300: Loss = 0.067 Acc = 1.00

Epoch 252 / 300: Loss = 0.067 Acc = 1.00

Epoch 253 / 300: Loss = 0.066 Acc = 1.00

Epoch 254 / 300: Loss = 0.065 Acc = 1.00

Epoch 255 / 300: Loss = 0.065 Acc = 1.00

Epoch 256 / 300: Loss = 0.064 Acc = 1.00

Epoch 257 / 300: Loss = 0.063 Acc = 1.00

Epoch 258 / 300: Loss = 0.063 Acc = 1.00

Epoch 259 / 300: Loss = 0.062 Acc = 1.00

Epoch 260 / 300: Loss = 0.061 Acc = 1.00

Epoch 261 / 300: Loss = 0.061 Acc = 1.00

Epoch 262 / 300: Loss = 0.060 Acc = 1.00

Epoch 263 / 300: Loss = 0.059 Acc = 1.00

Epoch 264 / 300: Loss = 0.059 Acc = 1.00

Epoch 265 / 300: Loss = 0.058 Acc = 1.00

Epoch 266 / 300: Loss = 0.058 Acc = 1.00

Epoch 267 / 300: Loss = 0.057 Acc = 1.00

Epoch 268 / 300: Loss = 0.057 Acc = 1.00

Epoch 269 / 300: Loss = 0.056 Acc = 1.00

Epoch 270 / 300: Loss = 0.055 Acc = 1.00

Epoch 271 / 300: Loss = 0.055 Acc = 1.00

Epoch 272 / 300: Loss = 0.054 Acc = 1.00

Epoch 273 / 300: Loss = 0.054 Acc = 1.00

Epoch 274 / 300: Loss = 0.053 Acc = 1.00

Epoch 275 / 300: Loss = 0.053 Acc = 1.00

Epoch 276 / 300: Loss = 0.052 Acc = 1.00

Epoch 277 / 300: Loss = 0.052 Acc = 1.00

Epoch 278 / 300: Loss = 0.051 Acc = 1.00

Epoch 279 / 300: Loss = 0.051 Acc = 1.00

Epoch 280 / 300: Loss = 0.050 Acc = 1.00

Epoch 281 / 300: Loss = 0.050 Acc = 1.00

Epoch 282 / 300: Loss = 0.050 Acc = 1.00

Epoch 283 / 300: Loss = 0.049 Acc = 1.00

Epoch 284 / 300: Loss = 0.049 Acc = 1.00

Epoch 285 / 300: Loss = 0.048 Acc = 1.00

Epoch 286 / 300: Loss = 0.048 Acc = 1.00

Epoch 287 / 300: Loss = 0.047 Acc = 1.00

Epoch 288 / 300: Loss = 0.047 Acc = 1.00

Epoch 289 / 300: Loss = 0.047 Acc = 1.00

Epoch 290 / 300: Loss = 0.046 Acc = 1.00

Epoch 291 / 300: Loss = 0.046 Acc = 1.00

Epoch 292 / 300: Loss = 0.045 Acc = 1.00

Epoch 293 / 300: Loss = 0.045 Acc = 1.00

Epoch 294 / 300: Loss = 0.045 Acc = 1.00

Epoch 295 / 300: Loss = 0.044 Acc = 1.00

Epoch 296 / 300: Loss = 0.044 Acc = 1.00

Epoch 297 / 300: Loss = 0.044 Acc = 1.00

Epoch 298 / 300: Loss = 0.043 Acc = 1.00

Epoch 299 / 300: Loss = 0.043 Acc = 1.00

Epoch 300 / 300: Loss = 0.043 Acc = 1.00
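From epoch 76 onward, the reported accuracy only takes the values 0.67, 0.78, 0.89 and 1.00, which are 6/9, 7/9, 8/9 and 9/9. This matches a token-level accuracy pooled over the nine tokens of the two training sentences in PyTorch's LSTM tagging tutorial. We are assuming that this is how `Acc` is computed in this notebook. The helper `token_accuracy` below is hypothetical and only illustrates the calculation.

```python
# Training set of PyTorch's LSTM part-of-speech tagging tutorial
# (assumed here to be the data used in this notebook).
training_data = [
    ("The dog ate the apple".split(), ["DET", "NN", "V", "DET", "NN"]),
    ("Everybody read that book".split(), ["NN", "V", "DET", "NN"]),
]


def token_accuracy(gold_seqs, pred_seqs):
    """Hypothetical helper: fraction of tokens, pooled over all sentences,
    whose predicted tag equals the gold tag."""
    correct = 0
    total = 0
    for gold, pred in zip(gold_seqs, pred_seqs):
        if len(gold) != len(pred):
            raise ValueError("gold and predicted sequences must have the same length")
        correct += sum(g == p for g, p in zip(gold, pred))
        total += len(gold)
    return correct / total


# 5 + 4 = 9 tokens, so every pooled accuracy is a multiple of 1/9.
n_tokens = sum(len(tags) for _, tags in training_data)
print([round(k / n_tokens, 2) for k in range(6, n_tokens + 1)])  # [0.67, 0.78, 0.89, 1.0]

gold = [tags for _, tags in training_data]
# Hypothetical predictions with a single wrong tag in the second sentence.
pred = [["DET", "NN", "V", "DET", "NN"], ["NN", "V", "DET", "DET"]]
print(round(token_accuracy(gold, pred), 2))  # 0.89
```

Under this reading, the accuracy jump at epoch 139 corresponds to the last of the nine training tokens being tagged correctly.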

Sentence: ['The', 'dog', 'ate', 'the', 'apple']

Labels: ['DET', 'NN', 'V', 'DET', 'NN']

Predicted: ['DET', 'NN', 'V', 'DET', 'NN']
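The predicted tags are obtained by applying the prediction rule \(\hat{y_i} = argmax_{j} \ log \ Softmax(Ah_i + b)\) to each word. The sketch below shows that decoding step in plain Python. It assumes the tag order `DET`, `NN`, `V` (as in the `tag_to_ix` mapping of PyTorch's tutorial), and the score matrix is hypothetical, not taken from the trained model.

```python
import math

# Assumed tag order; the notebook's own tag-to-index mapping may differ.
TAGS = ["DET", "NN", "V"]


def log_softmax(row):
    """Numerically stable log-softmax of a single score vector."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def decode(scores, tags=TAGS):
    """For each row of A h_i + b, pick the tag with the largest log-probability."""
    predicted = []
    for row in scores:
        log_probs = log_softmax(row)
        best = max(range(len(log_probs)), key=log_probs.__getitem__)
        predicted.append(tags[best])
    return predicted


# Hypothetical affine outputs A h_i + b for a five-word sentence (one row per word).
scores = [
    [2.0, 0.1, -1.0],
    [0.3, 1.7, 0.2],
    [-0.5, 0.4, 2.2],
    [1.9, 0.0, -0.3],
    [0.1, 2.5, 0.6],
]
print(decode(scores))  # ['DET', 'NN', 'V', 'DET', 'NN']
```

Since log-softmax only subtracts the same constant from every entry of a row, taking the argmax of the raw scores gives the same tags; the log-softmax is needed for the negative log-likelihood loss during training, not for decoding.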

If you want to try a different simulator backend, you can also install pennylane-qiskit and experiment with the qiskit.aer or qiskit.qasm devices. Be aware that training will be considerably slower with the Qiskit simulators.